Do Wider Neural Networks Really Help Adversarial Robustness?

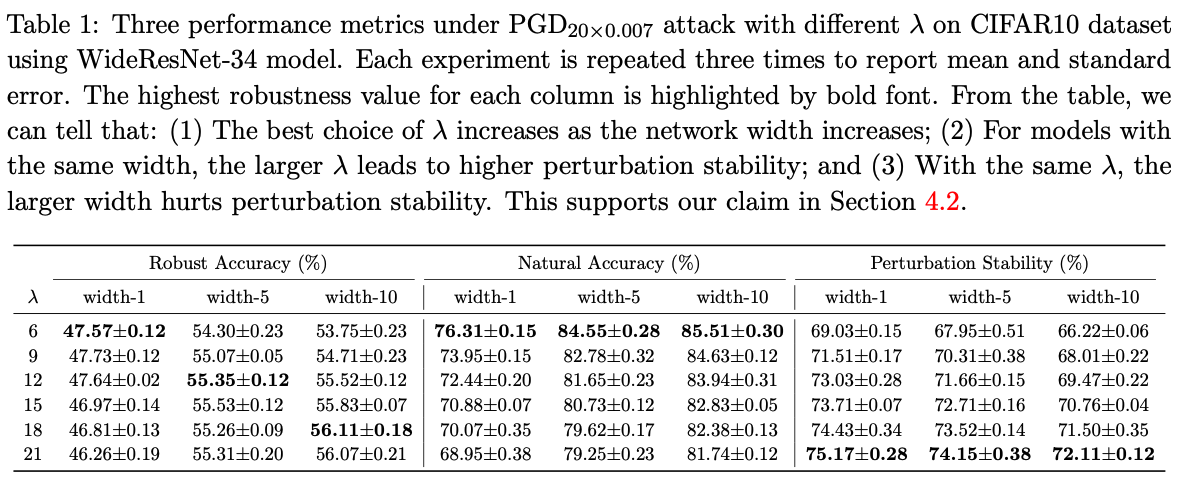

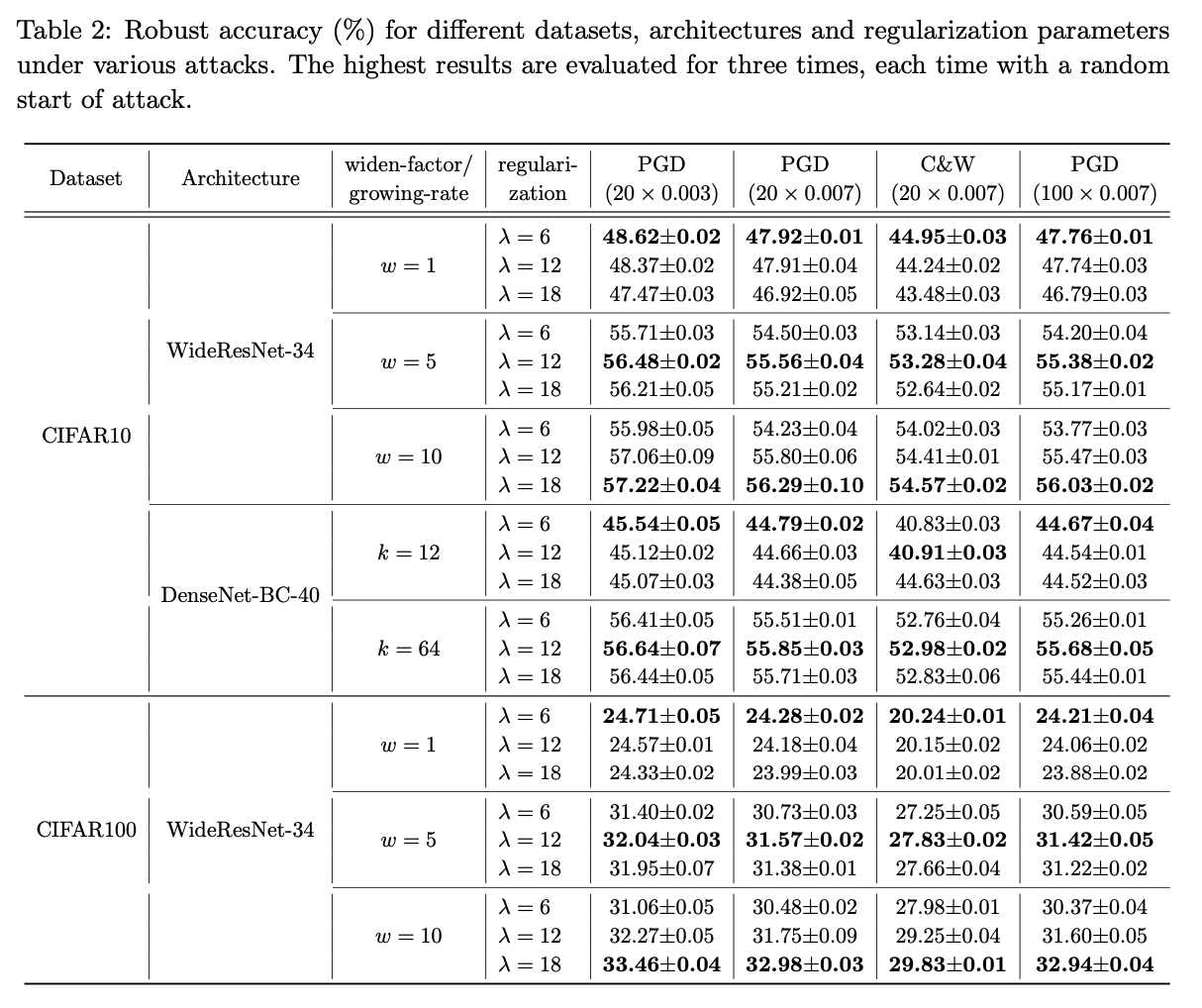

The authors relate robustness to adversarial perturbations to the local Lipschitzness of the neural network. It follows from this theory that wider models (such as WideResNet) are less stable under adversarial perturbations than narrower ones. To compensate, the stability parameter λ should be increased during adversarial training. The authors also report how robustness depends on network width and on λ for the CIFAR10 and CIFAR100 datasets.

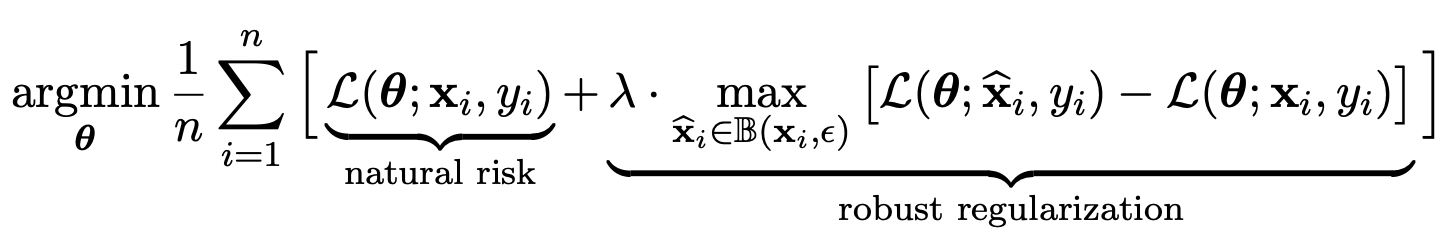

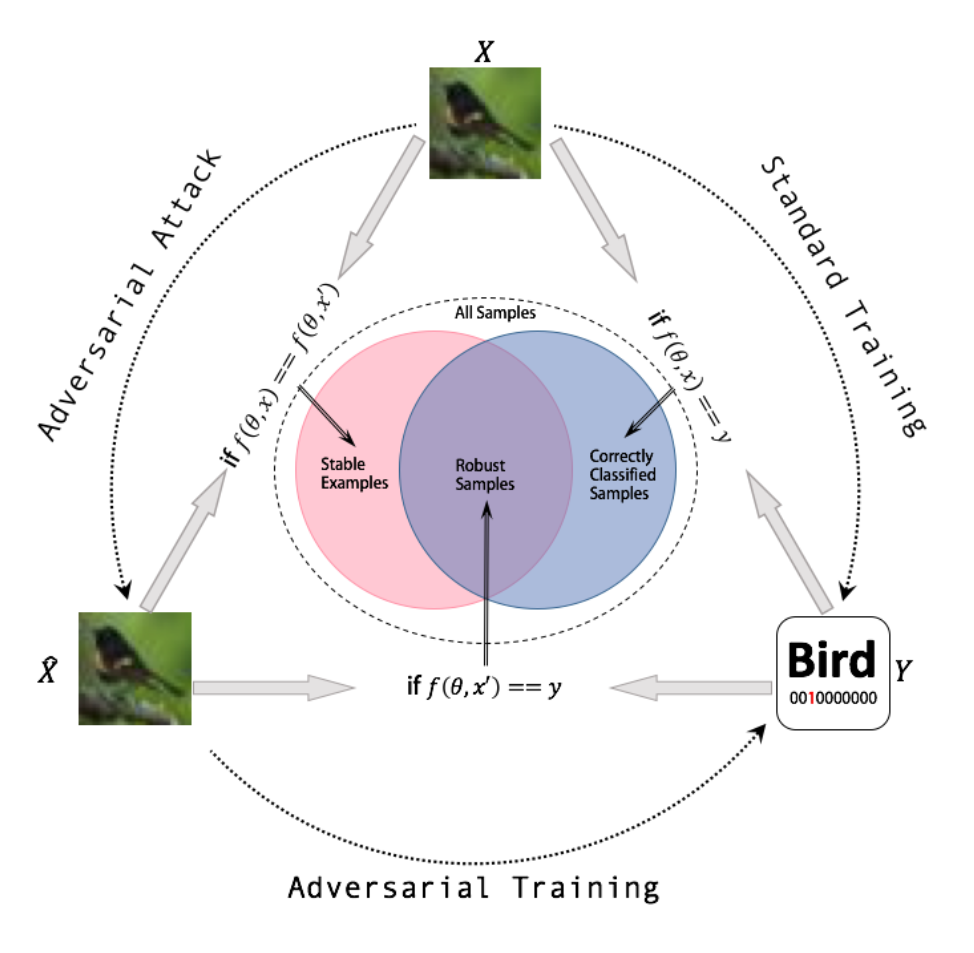

Adversarial Training

A form of data augmentation in which adversarial samples are first generated (samples whose distance from the real images does not exceed a specified value ε in the Lp norm), and the model is then trained on a combination of original and adversarial data.
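
One common way to write such a combined objective is sketched below. It assumes the TRADES-style formulation, which the stability parameter λ mentioned in this summary suggests. The symbols f_θ (the network), ℓ (the classification loss), and KL (the Kullback-Leibler divergence) are notational assumptions, not taken from this text.

```latex
\min_{\theta} \; \frac{1}{n} \sum_{i=1}^{n}
\Big[ \ell\big(f_\theta(x_i), y_i\big)
+ \lambda \max_{\|x_i' - x_i\|_p \le \varepsilon}
\mathrm{KL}\big(f_\theta(x_i) \,\|\, f_\theta(x_i')\big) \Big]
```

In this form the first term rewards accuracy on clean samples, while the second penalizes predictions that change inside the ε-ball, with λ setting the trade-off between the two.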

Empirical Research

Robust accuracy is the main metric for assessing network robustness. It measures the fraction of samples that are still classified correctly after an attack.

Natural accuracy is the usual accuracy on clean samples, before the attack.

Perturbation stability is a new metric that measures the percentage of samples whose predicted labels do not change after an attack.
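
The three metrics can be computed from the same three lists of labels. The sketch below is a minimal illustration. The function name `evaluate_metrics` and the toy labels are hypothetical and are not results from the paper.

```python
def evaluate_metrics(true_labels, clean_preds, adv_preds):
    """Return (natural accuracy, robust accuracy, perturbation stability)."""
    n = len(true_labels)
    if n == 0 or not (n == len(clean_preds) == len(adv_preds)):
        raise ValueError("inputs must be non-empty and of equal length")
    # Natural accuracy: clean predictions that match the true labels.
    natural = sum(p == y for p, y in zip(clean_preds, true_labels)) / n
    # Robust accuracy: predictions under attack that match the true labels.
    robust = sum(a == y for a, y in zip(adv_preds, true_labels)) / n
    # Perturbation stability: predictions that the attack did not change,
    # regardless of whether they were correct in the first place.
    stability = sum(a == p for a, p in zip(adv_preds, clean_preds)) / n
    return natural, robust, stability


# Toy example with made-up labels (not results from the paper).
y_true = [0, 1, 2, 1]
y_clean = [0, 1, 1, 1]
y_adv = [0, 2, 1, 1]
print(evaluate_metrics(y_true, y_clean, y_adv))  # (0.75, 0.5, 0.75)
```

The third sample is misclassified both before and after the attack, so it counts toward perturbation stability but not toward either accuracy. This is why stability and robust accuracy can move apart.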

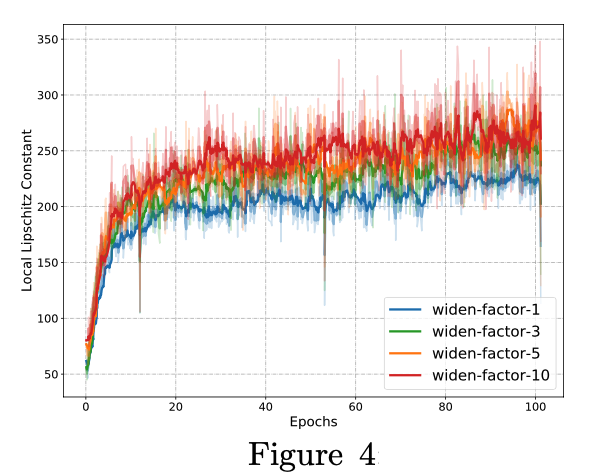

As can be seen in the graph (Figure 3), perturbation stability decreases monotonically as model width increases. This suggests that wider models are actually more vulnerable to adversarial perturbations.

Local Lipschitzness and Model Width

Many authors associate local Lipschitzness (Lemma 4.1) with network stability under adversarial perturbations, suggesting that lower local Lipschitzness leads to more robust models.
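
To make the notion concrete, the sketch below estimates a local Lipschitz constant by random sampling. It assumes one common definition: the largest ratio of the L1 change in the output to the L∞ distance in the input over an ε-ball. The paper's exact definition may differ. The function name `estimate_local_lipschitz` and the toy function are hypothetical, and random sampling gives only a lower bound on the true constant.

```python
import random


def estimate_local_lipschitz(f, x, eps, n_samples=1000, seed=0):
    """Lower-bound estimate of sup ||f(x') - f(x)||_1 / ||x' - x||_inf
    over points x' in the L-infinity ball of radius eps around x."""
    rng = random.Random(seed)
    fx = f(x)
    best = 0.0
    for _ in range(n_samples):
        # Sample a perturbation uniformly inside the L-infinity ball.
        delta = [rng.uniform(-eps, eps) for _ in x]
        dist = max(abs(d) for d in delta)
        if dist == 0.0:
            continue
        fx2 = f([xi + di for xi, di in zip(x, delta)])
        change = sum(abs(a - b) for a, b in zip(fx2, fx))
        best = max(best, change / dist)
    return best


# Toy linear map f(x) = [2*x0 - x1]; under these norms its true
# constant is |2| + |-1| = 3, so the estimate approaches 3 from below.
toy = lambda x: [2.0 * x[0] - x[1]]
print(estimate_local_lipschitz(toy, [0.5, 0.5], eps=0.1))
```

For a real network, `f` would be the model's output function and an attack such as PGD would typically replace random sampling, since it searches for the worst-case point rather than an average one.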

In this article, the authors show that local Lipschitzness grows with model width (Figure 4). More precisely, local Lipschitzness scales as the square root of the model width (Lemma 4.2).
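
As a short worked example of the square-root scaling, assume an idealized law L(m) = c·√m, where m is the width and c is a hypothetical constant. This is a simplification of Lemma 4.2 rather than its exact statement, and it treats the WideResNet width factor as proportional to m.

```latex
\frac{L(10)}{L(1)} = \frac{c\sqrt{10}}{c\sqrt{1}} = \sqrt{10} \approx 3.16,
\qquad
\frac{L(5)}{L(1)} = \sqrt{5} \approx 2.24
```

Under this assumption, a tenfold increase in width raises the local Lipschitz constant by a factor of roughly three, not ten.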

Experiments

The previous sections showed that wider models have lower perturbation stability. One way to improve stability is to increase λ, the parameter that controls it.

The main experiments were carried out on the CIFAR10 dataset using WideResNet with different widths (1, 5, 10). The batch size is 128 and the number of epochs is 100. The learning rate starts at 0.1 and is halved at each epoch after the 75th epoch.
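
The learning rate schedule described above can be sketched as a small function. This reads "halved at each epoch after the 75th" literally and assumes 1-indexed epochs. The paper's actual schedule may differ, and the name `learning_rate` and its parameters are hypothetical.

```python
def learning_rate(epoch, base_lr=0.1, decay_start=75, factor=0.5):
    """Learning rate for a 1-indexed epoch: constant up to decay_start,
    then multiplied by `factor` once per epoch after that."""
    if epoch < 1:
        raise ValueError("epoch is 1-indexed")
    halvings = max(0, epoch - decay_start)
    return base_lr * factor ** halvings


for epoch in (1, 75, 76, 77, 100):
    print(epoch, learning_rate(epoch))
```

Under this reading the rate is 0.1 through epoch 75, 0.05 at epoch 76, and 0.025 at epoch 77, and it becomes very small by epoch 100.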

The best robust accuracy for a model of width 1 is obtained with λ = 6; for width 5, with λ = 12; and for width 10, with λ = 18.

The results are summarized in Tables 1 and 2.