A Closer Look at Accuracy vs. Robustness

Modern methods for training robust neural networks tend to reduce accuracy on test data. As a result, prior work has assumed that a trade-off between robustness and accuracy may be inevitable.

This paper shows that the image datasets it studies are r-separable: examples from different classes are at least 2r apart in pixel space. This r-separation holds for values of r that exceed the radius ε of the adversarial perturbations. Therefore, in principle there exists a classifier that is both accurate and robust to adversarial perturbations of size up to r.

R-separability of Datasets

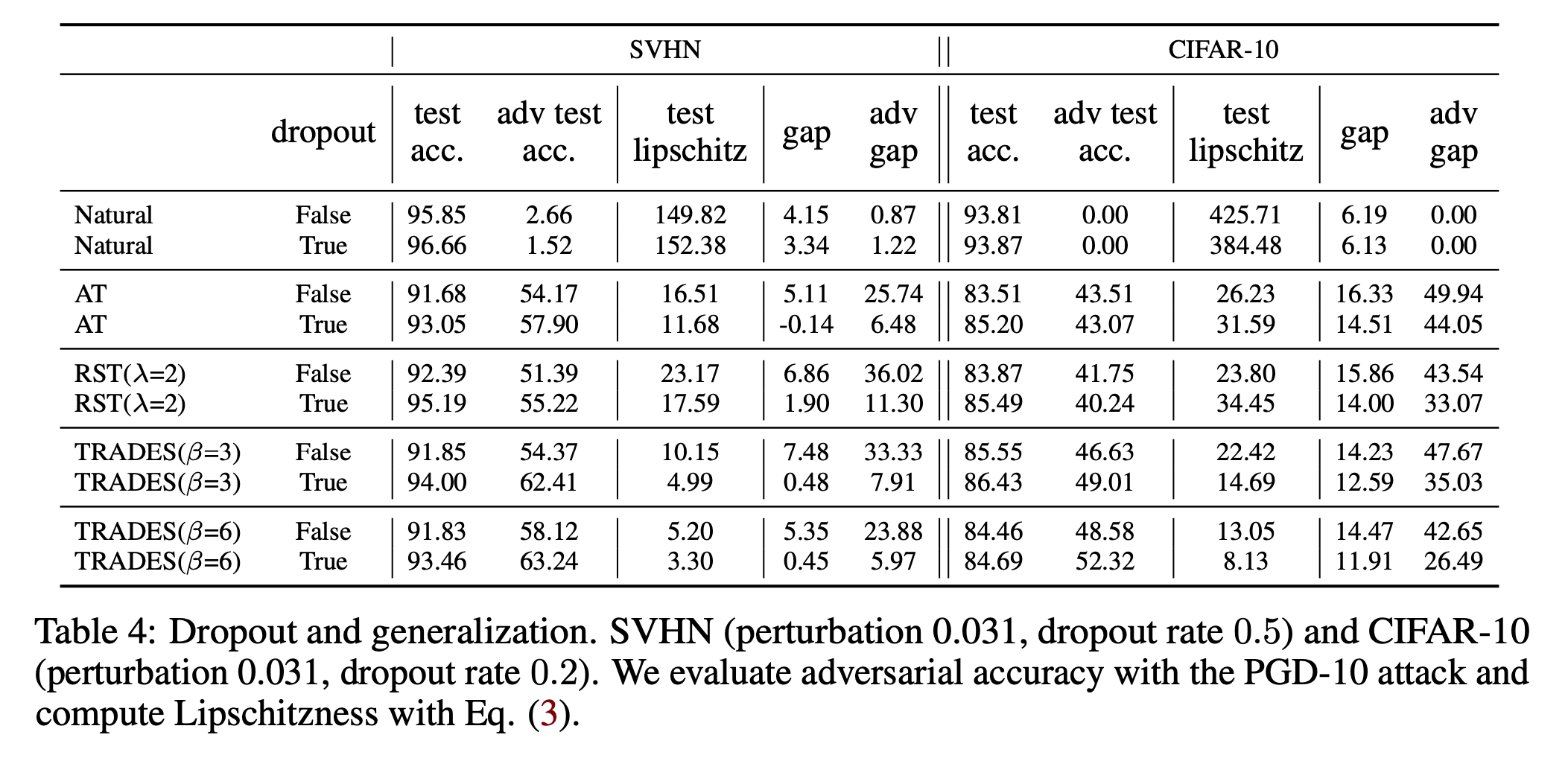

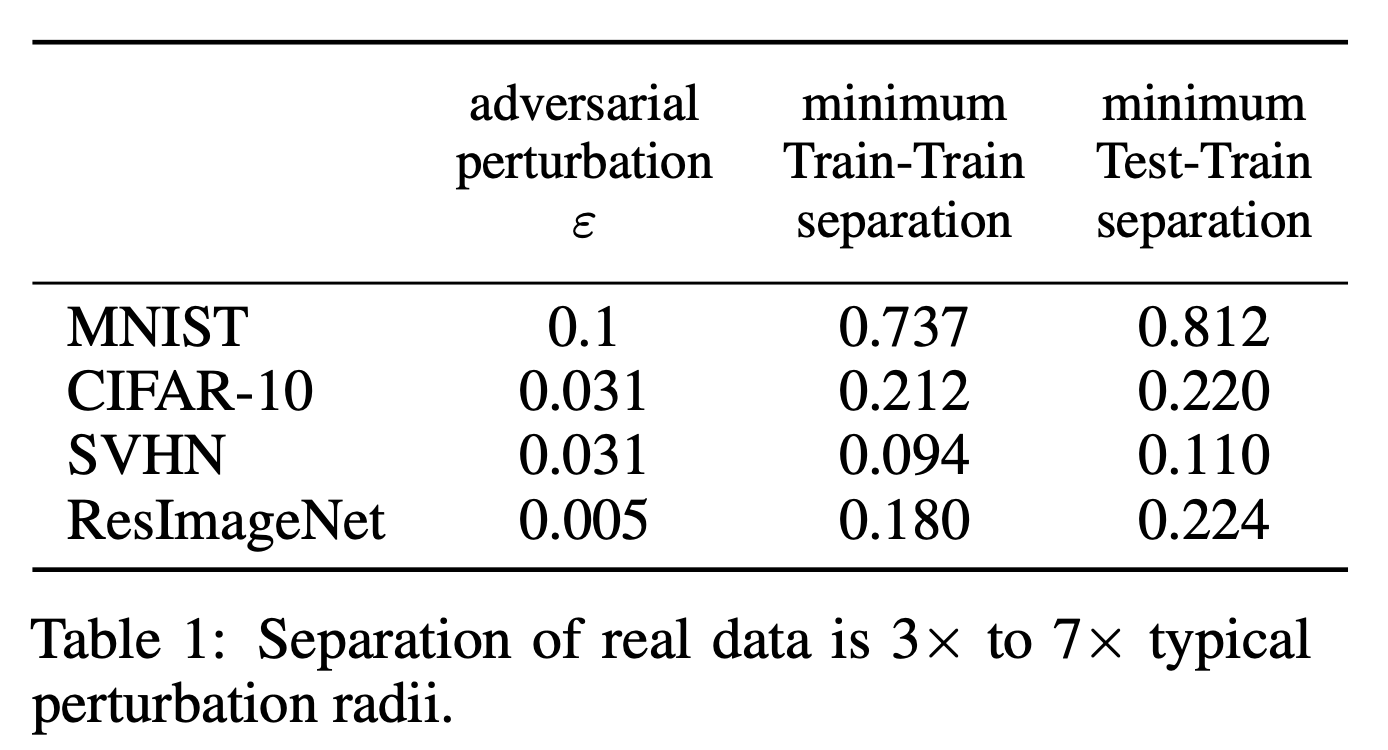

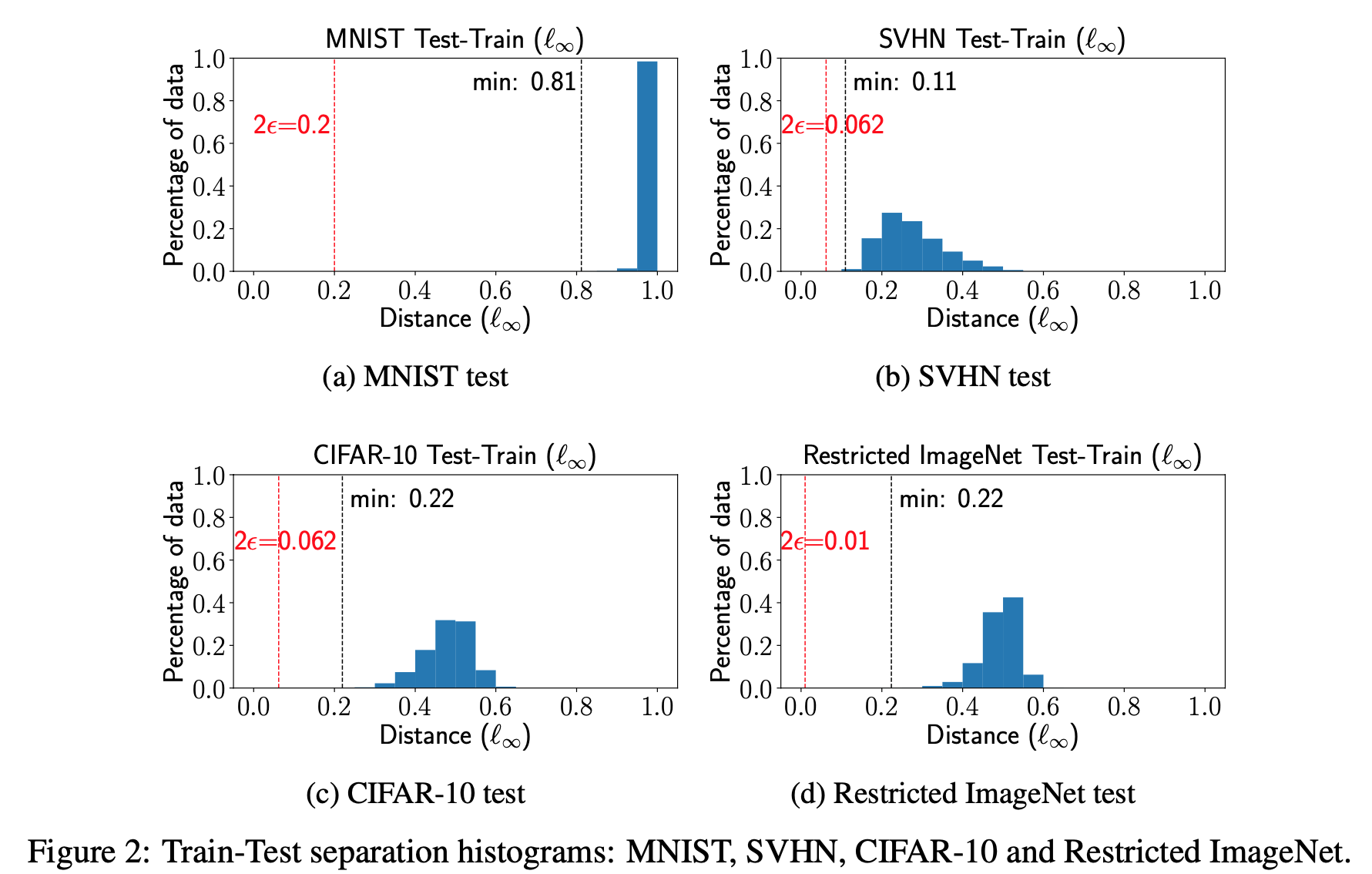

The article covers the MNIST, CIFAR-10, SVHN, and Restricted ImageNet datasets. In all of them, the separation between classes is greater than 2ε. Table 1 shows statistics of the L∞ distances between training samples and their nearest neighbors from other classes. Figure 2 shows histograms of the separation for the train and test data.
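The separation statistic described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the function names (`linf_distance`, `nearest_other_class_distances`, `is_r_separated`) and the toy data are hypothetical, and the brute-force search is quadratic in the number of samples, so it would be far too slow for a real dataset.

```python
def linf_distance(a, b):
    """L-infinity distance between two equal-length feature vectors."""
    return max(abs(x - y) for x, y in zip(a, b))


def nearest_other_class_distances(points, labels):
    """For each point, the distance to its nearest neighbor with a different label."""
    distances = []
    for p, label_p in zip(points, labels):
        nearest = min(
            linf_distance(p, q)
            for q, label_q in zip(points, labels)
            if label_q != label_p
        )
        distances.append(nearest)
    return distances


def is_r_separated(points, labels, r):
    """True if every pair of differently labeled points is at least 2r apart."""
    return min(nearest_other_class_distances(points, labels)) >= 2 * r


# Toy "images" with pixel values in [0, 1]; purely illustrative data.
points = [[0.0, 0.1], [0.1, 0.0], [0.9, 1.0], [1.0, 0.8]]
labels = [0, 0, 1, 1]

min_separation = min(nearest_other_class_distances(points, labels))
print(min_separation)
print(is_r_separated(points, labels, r=0.3))
```

On this toy data the smallest cross-class distance is about 0.9, so the set is r-separable for any r up to roughly 0.45.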

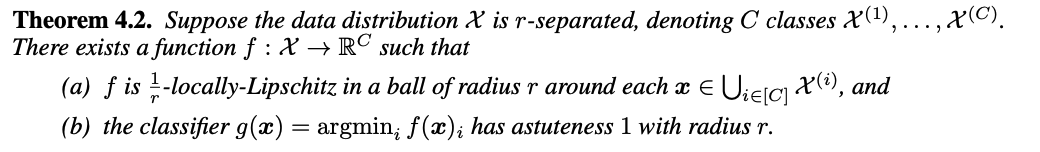

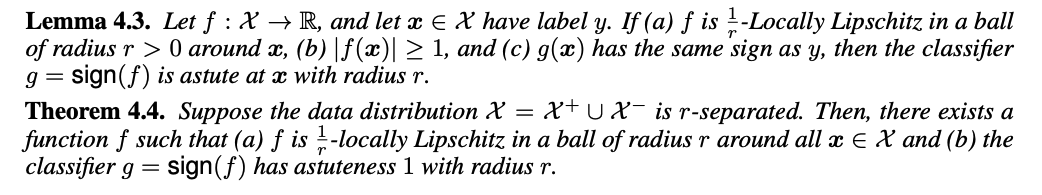

Accuracy and Robustness to Adversarial Perturbations for r-separable Data

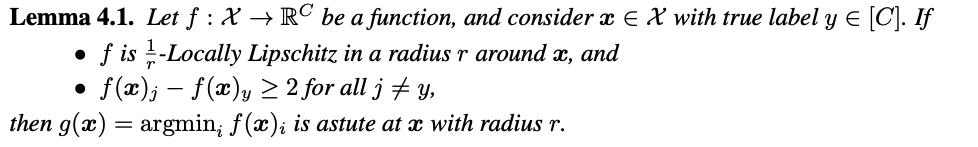

The authors prove that if the data is r-separable, there exists a classifier based on a locally Lipschitz function that achieves robust accuracy 1 at radius r.
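One way to make this existence claim concrete is a distance-based classifier: score each class by the distance from the input to that class's nearest point, scaled by 1/(2r), and predict the class with the smallest score. The sketch below assumes this construction for illustration. The names `class_scores` and `predict` and the toy data are hypothetical, and the check covers only the training points themselves, not unseen data.

```python
import itertools


def linf_distance(a, b):
    """L-infinity distance between two equal-length feature vectors."""
    return max(abs(x - y) for x, y in zip(a, b))


def class_scores(x, class_points, r):
    """Score each class by dist(x, nearest point of that class) / (2r)."""
    return {
        label: min(linf_distance(x, p) for p in pts) / (2 * r)
        for label, pts in class_points.items()
    }


def predict(x, class_points, r):
    """Predict the class with the smallest scaled distance."""
    scores = class_scores(x, class_points, r)
    return min(scores, key=scores.get)


# Toy data: the smallest cross-class L-infinity distance is 0.9 >= 2r.
class_points = {0: [[0.0, 0.1], [0.1, 0.0]], 1: [[0.9, 1.0], [1.0, 0.8]]}
r = 0.4

# Perturb every point toward the corners, edges, and center of an
# L-infinity ball whose radius is slightly below r.
delta = 0.39
robust = all(
    predict([xi + s * delta for xi, s in zip(x, signs)], class_points, r) == label
    for label, pts in class_points.items()
    for x in pts
    for signs in itertools.product((-1, 0, 1), repeat=len(x))
)
print(robust)
```

The prediction stays fixed because a perturbed point remains within distance r of its own class while every other class is still more than r away, which follows from the triangle inequality and the 2r separation.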

Existing Methods of Training Models

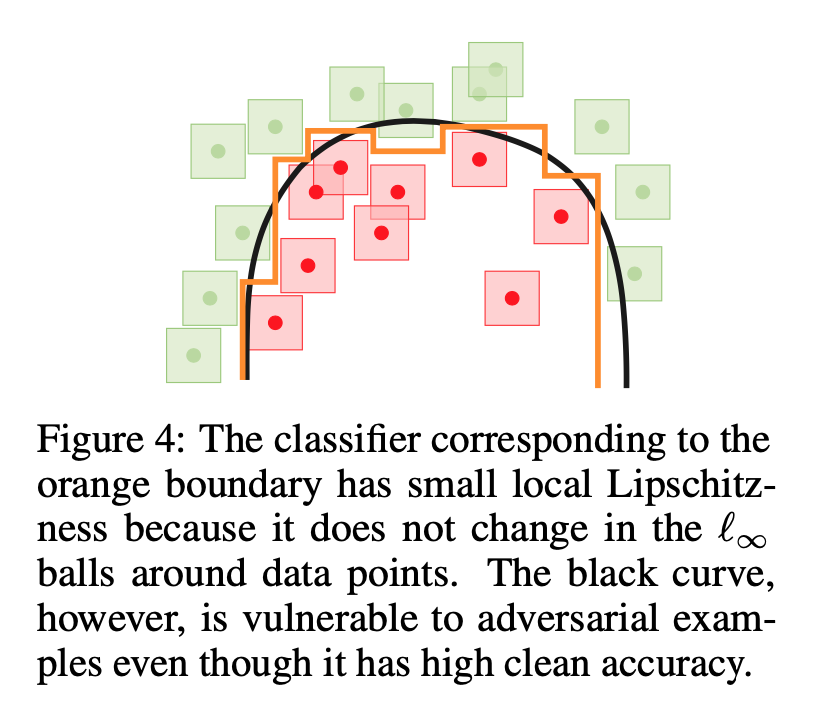

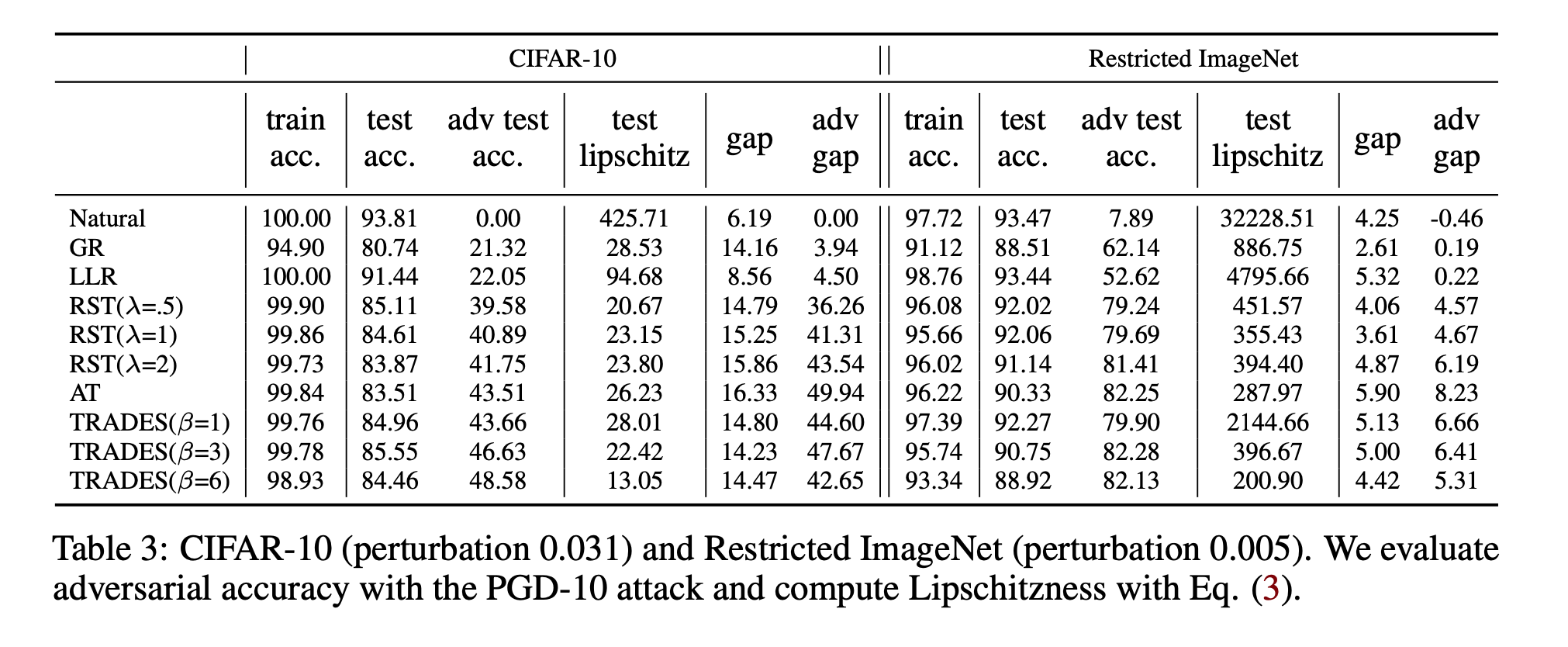

The experimental part of the article compares different training methods (gradient regularization, adversarial training, TRADES, Robust Self Training, and others) under projected gradient descent (PGD) and multi-targeted attacks. Tables 2 and 3 show the main statistics.
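For readers unfamiliar with projected gradient descent, the following is a minimal sketch of an L∞ PGD attack on a toy logistic model. It is not the paper's experimental setup: the linear model, its weights, the step size, and the helper names (`input_gradient`, `pgd_linf`, `score`) are all assumptions chosen so the example runs with the standard library alone.

```python
import math
import random


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def clip(v, lo, hi):
    """Clamp v to the interval [lo, hi]."""
    return max(lo, min(hi, v))


def input_gradient(x, y, w, b):
    """Gradient of log(1 + exp(-y * (w.x + b))) with respect to the input x."""
    margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
    coeff = -y / (1.0 + math.exp(margin))
    return [coeff * wi for wi in w]


def pgd_linf(x, y, w, b, eps, step, n_steps, rng):
    """Maximize the loss within an L-infinity ball of radius eps around x."""
    # Random start inside the ball, kept in the valid pixel range [0, 1].
    x_adv = [clip(xi + rng.uniform(-eps, eps), 0.0, 1.0) for xi in x]
    for _ in range(n_steps):
        grad = input_gradient(x_adv, y, w, b)
        # Signed gradient ascent step, then projection onto the ball and [0, 1].
        x_adv = [
            clip(clip(xa + step * sign(g), xi - eps, xi + eps), 0.0, 1.0)
            for xa, g, xi in zip(x_adv, grad, x)
        ]
    return x_adv


def score(v, w, b):
    """Linear decision score; positive means class +1."""
    return sum(wi * vi for wi, vi in zip(w, v)) + b


# Hypothetical model and input, chosen only for illustration.
w, b = [2.0, -1.0], -0.5
x, y = [0.6, 0.2], 1
eps = 0.3

x_adv = pgd_linf(x, y, w, b, eps=eps, step=0.1, n_steps=10, rng=random.Random(0))
clean_score = score(x, w, b)
adv_score = score(x_adv, w, b)
print(clean_score > 0, adv_score < 0)
```

Here the clean input is classified correctly, while the perturbed input, which stays within ε of the original in every coordinate, crosses the decision boundary.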

The tables show that adversarial training (AT), Robust Self Training (RST), and TRADES are more robust than the other methods. Local Lipschitzness is the quantity most correlated with adversarial accuracy. More robust methods impose a higher degree of local Lipschitzness, that is, a smoother classifier with a smaller measured Lipschitz constant. However, there are cases where accuracy on test data drops even though the classifier keeps getting smoother.
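A rough way to measure local Lipschitzness empirically is to average, over the data, the largest observed ratio between the L1 change in the model output and the L∞ change in the input within an ε-ball. The sketch below assumes that form of the statistic and approximates the inner maximization by random sampling, which only gives a lower bound; a gradient-based search would give tighter estimates. The function `empirical_local_lipschitz` and the toy linear models are hypothetical.

```python
import random


def l1_distance(a, b):
    """L1 distance between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def linf_distance(a, b):
    """L-infinity distance between two equal-length vectors."""
    return max(abs(x - y) for x, y in zip(a, b))


def empirical_local_lipschitz(f, xs, eps, n_samples, rng):
    """Average over xs of the largest sampled output/input change ratio."""
    total = 0.0
    for x in xs:
        fx = f(x)
        best = 0.0
        for _ in range(n_samples):
            x_prime = [xi + rng.uniform(-eps, eps) for xi in x]
            input_change = linf_distance(x, x_prime)
            if input_change == 0.0:
                continue  # skip degenerate samples
            best = max(best, l1_distance(fx, f(x_prime)) / input_change)
        total += best
    return total / len(xs)


def sharp_model(x):
    """Toy linear model whose output changes twice as fast as the input."""
    return [x[0] + x[1], x[0] - x[1]]


def smooth_model(x):
    """Same model with outputs scaled by 0.5, hence smoother."""
    return [0.5 * v for v in sharp_model(x)]


xs = [[0.2, 0.4], [0.5, 0.5], [0.9, 0.1]]
sharp = empirical_local_lipschitz(sharp_model, xs, 0.1, 50, random.Random(0))
smooth = empirical_local_lipschitz(smooth_model, xs, 0.1, 50, random.Random(0))
print(sharp > smooth)
```

For these linear toy models the ratio is the same for every perturbation (2 and 1 respectively), so the estimate is exact; for a real network the sampled value would only approximate the true local constant.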

It is worth noting that the methods that train locally Lipschitz classifiers have generalization gaps: a large difference between training and test accuracy, and an even larger one between training and adversarial test accuracy. The authors managed to reduce this gap by adding dropout. Combining dropout with robust training methods improves natural accuracy, adversarial accuracy, and local Lipschitzness.